Technology exists to support the business goals of an organization. As advanced as technology gets over time, it still needs to be supported. Issues and complexity exist and need to be tended to, often on a daily basis. For general information technology; a help desk, service desk, or operations center is there to receive and triage general issues. Often, this group is the initial point of contact for all IT related issues. An adjacent support function to the help desk, is the network operations center (NOC). The main function of the NOC is to monitor the network infrastructure to ensure that all devices and links are online and performing at optimal levels. When issues arise, the NOC will triage and troubleshoot those issues, escalating to other individuals when necessary. From a security perspective, we have the option of a similar group, called the security operations center (SOC). The main functions of a SOC are to monitor the organization for threats and events, then act on those by investigating, analyzing, and escalating them. That being stated though, just like many other aspects, there is no “one size fits all” when it comes to the types and deployment models of a security operations center.

SOC Types

There are three main types of security operations centers: threat-centric, compliance-based, and operational-based. A threat-centric SOC focuses on seeking out threats and malicious activity on the network. Threat-centric SOCs can be prepared for this by leveraging threat intelligence sources, staying updated on new and existing vulnerabilities, and understanding baseline traffic within the organization’s network so that they understand what specifically is anomalous behavior. A compliance-based SOC takes a different approach. The focus of a compliance-based SOC is to ensure that the organization stays in compliance of regulatory standards. They can do this by understanding those regulations and comparing them to the operations and posture of the organization to ensure compliance. Finally, an operational-based SOC focuses on understanding internal business operations to be able to protect it. Focuses of the operational-based SOC may include identity and access management and maintaining intrusion detection system (IDS) rules as well as firewall rules.

SOC Deployment Models

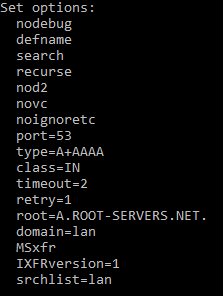

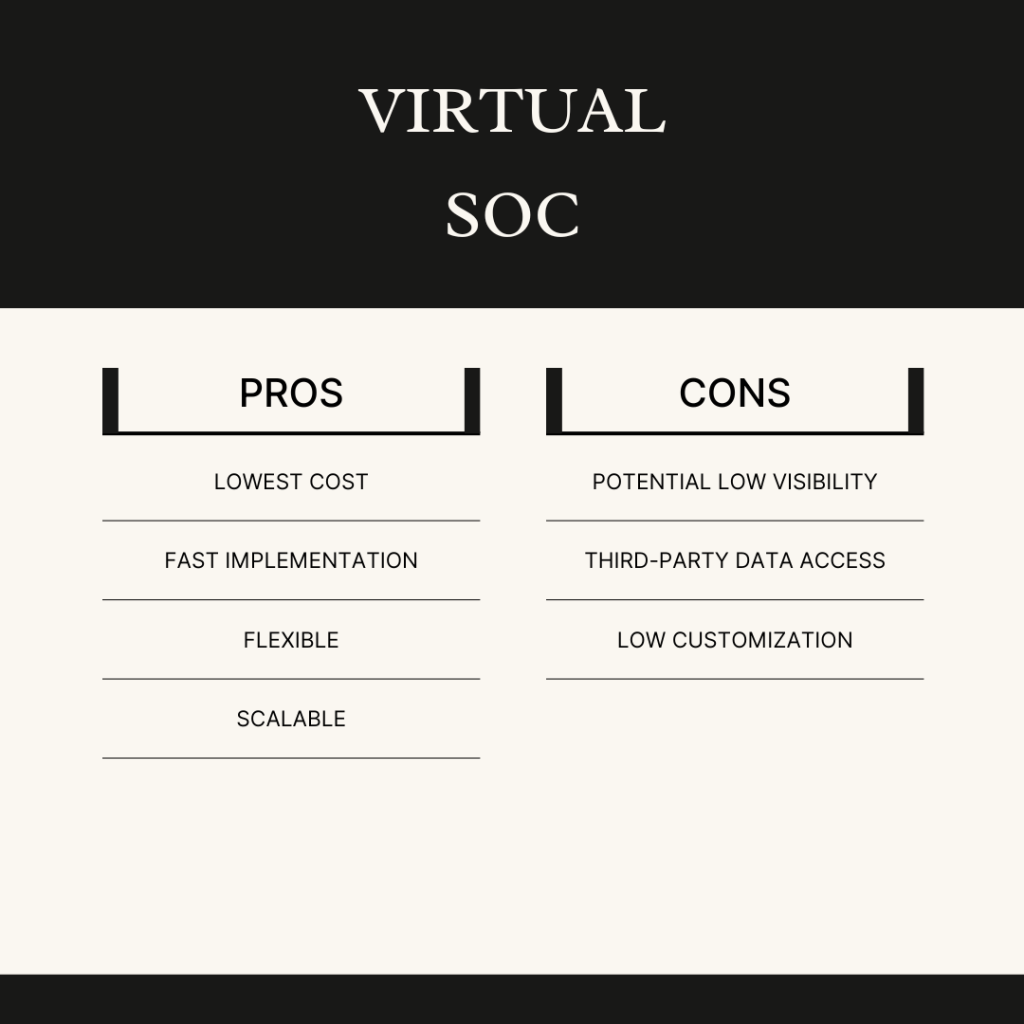

Just like there are multiple types of security operations centers, there are also multiple ways in which a SOC can be deployed. There are three main SOC deployment models: internal, virtual (vSOC), and hybrid. An internal SOC is built and operated by the organization itself. It is staffed and run by employees internal to the organization. For organizations who have or are able to recruit and retain talented SOC individuals and either cannot or do not desire to allow third-party companies to have access to their data, the internal SOC is a fit. The virtual (vSOC) deployment model is a contracted service. All SOC operations are handled by a third-party business. The vSOC model makes sense for organizations who do not have the staff to handle the internal SOC model and they are able to allow a third-party to have access to their data. Finally, you guessed it, the hybrid SOC deployment model is a combination of the internal and vSOC models. This model is a fit for organizations who want to augment their internal staff with external expertise and coverage as well. See the images below for a look at the pros and cons of the three different SOC delivery models.

Rounding it Out

A security operations center can be crucial to the security and continued operations of a business. A SOC keeps a watchful eye over an organization by detecting and handling incidents and events. There are multiple ways a SOC can operate and be deployed. SOC types include: threat-centric, compliance-based, and operational-based, while the deployment models include: internal, virtual (vSOC), and hybrid.

Sources: I gained this information by going through the “Understanding Cisco Cybersecurity Operations Fundamentals | CBROPS” course on Cisco U.